Your favorite buzzword, “AI,” is once more in the news, this time because of the recent development of OpenAI’s ChatGPT.

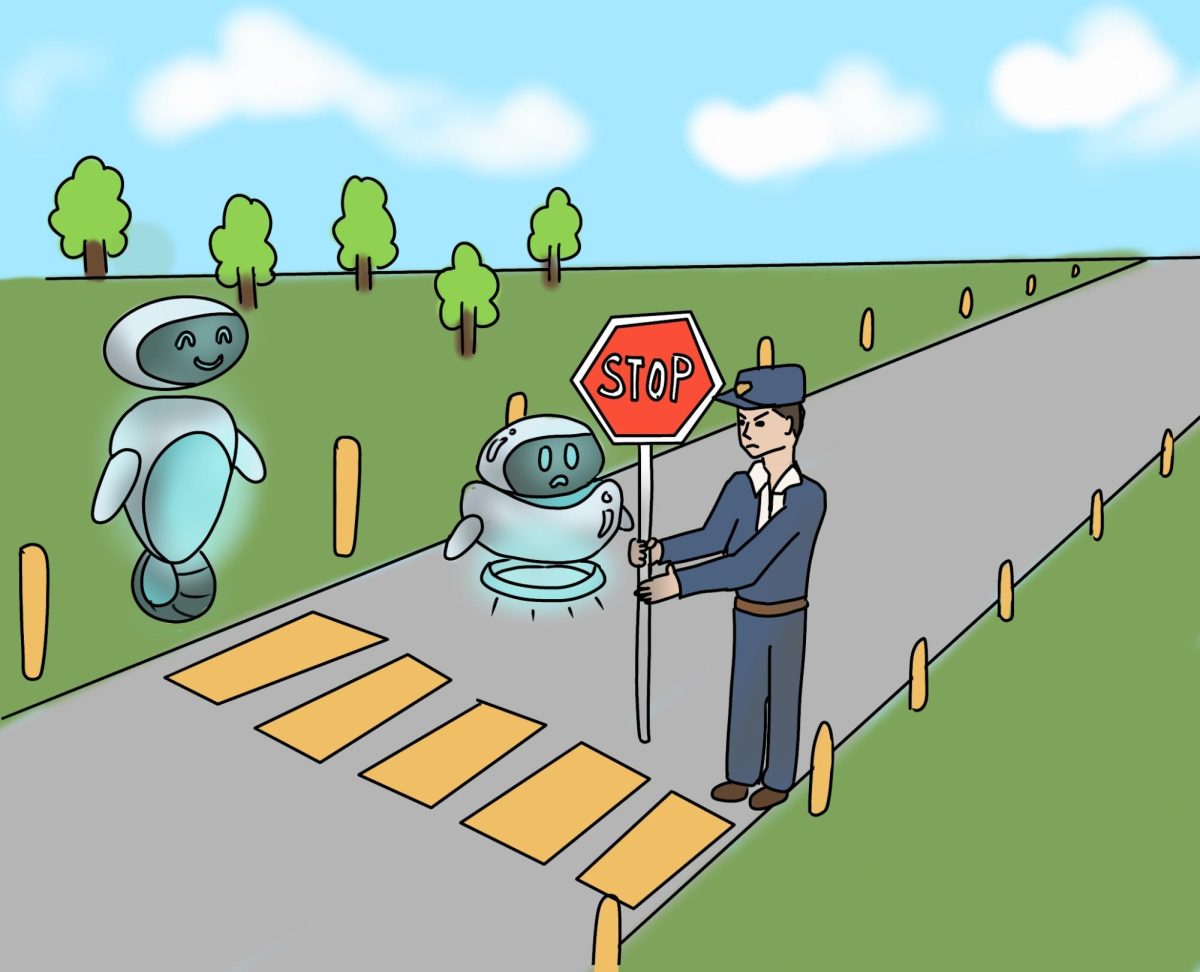

AI, or Artificial Intelligence, is an autonomous and adaptive computer system that possesses human-like attributes that make it, well, “intelligent.” Ethics has always been a concern in AI development, but now, with new capabilities and a new level of widespread accessibility, questions about regulation are at the forefront of the discussion.

AI cannot regulate itself, and AI regulation cannot be left solely to private companies – been there, done that, right? The “Gilded Age,” which began in the 1870s, was an era in which the U.S. imposed little to no regulation on the private sector. Coming out of the first Industrial Revolution and at the dawn of the second, the U.S. entered an unknown era of business; it was easy for a small number of powerful and corrupt businessmen, the so-called Robber Barons, to dominate specific industries and create monopolies. Their failure to self-regulate contributed to one of the largest gaps between rich and poor that the U.S. has ever seen. This is to say that self-regulation is a risky business, especially when it comes to new technologies.

In the current era, there are few concrete definitions of what an “ethical” practice is in tech. Take social media platforms, which are infamous for their reluctance to censor “fake news,” citing murky arguments about the First Amendment.

The growing AI industry has shown similarities to its tech sector counterparts. Proponents of regulation argue that AI, which critics have noted is inherently biased, could lead to discriminatory practices and even infringe on human rights.

Others worry that AI will threaten academic integrity, arguing that it will be used to produce high-quality essays and will otherwise encourage infringement of intellectual property. We need to examine this claim more carefully.

The possibility of plagiarism isn’t a new concern brought on by AI; the newfound fear of AI plagiarism simply exposes educators’ lack of trust in their students. Plagiarism in academia long predates AI: it was facilitated in the early 2000s by widely accessible websites and, more recently, by services like Grammarly. The fact of the matter is that, with every new technology, this “risk” will no doubt be raised as a concern. This does not mean that we should regulate AI at a governmental level any more than we have regulated Grammarly or SparkNotes.

A fear-based approach to AI regulation will cause more harm than good. While regulation will be necessary, the danger lies in rushing to regulate, especially in the early stages of this technology’s development.

Rushing to regulate the industry will detract from innovation. The best way to understand the risks AI may present is to continue with research and collection of data.

A slow and steady approach to regulation has proved successful before. As Jeremy Straub wrote in “Elon Musk is Wrong About Regulating Artificial Intelligence,” “Humanity faced a similar set of issues in the early days of the internet. But the United States actively avoided regulating the internet to avoid stunting its early growth. Musk’s PayPal and numerous other businesses helped build the modern online world while subject only to regular human-scale rules, like those preventing theft and fraud.” What is history but a cycle of learning? With technology in general, and now with AI, it will be critical for us to follow the roads already traveled when determining what we want to regulate.

Transparency will be our best friend. With a hands-off approach as research and innovation continue, it will be crucial for companies to proceed with caution.

We must recall that companies paving the road for AI are already bound by existing legislation. However, in the coming years, government and big companies will need to make limited interventions in the industry to see the best, and maybe even life-changing, outcomes.